This vendor comparison will give you a head-to-head breakdown of the market's leading deepfake attack simulation platforms - from integrated security awareness training suites to specialist voice deepfake tools.

What is a deepfake phishing simulation?

A deepfake phishing simulation is a controlled training exercise that uses AI-generated voice/video and agentic AI conversations to mimic modern social engineering chains. It measures real behaviors (reporting, verification, data entry), produces risk scores, and feeds Security Awareness Training and incident response improvements - without real-world harm.

Using Hoxhunt, enterprises run campaigns that start with a spoofed email, pivot into an attacker-controlled meeting, and deliver a short AI-generated voice/video request before debrief and training.

2025 AI vishing & voice-cloning threats

What’s changed in 2025?

- Vishing exploded: Voice-led social engineering rose 442% YoY, as adversaries exploit the lingering trust we place in phone calls and voice.

- Deepfakes are now reality: In the first quarter of 2025 alone, there were 179 deepfake incidents, surpassing the total for all of 2024 by 19%.

- AI in the wild, but not ubiquitous: Our phishing trends report found that in a production dataset of 386k malicious emails, only 0.7%-4.7% were AI-crafted - still enough to raise success rates when paired with voice.

Deepfake attack simulation platforms comparison table

6 Best deepfake simulation platforms for enterprises

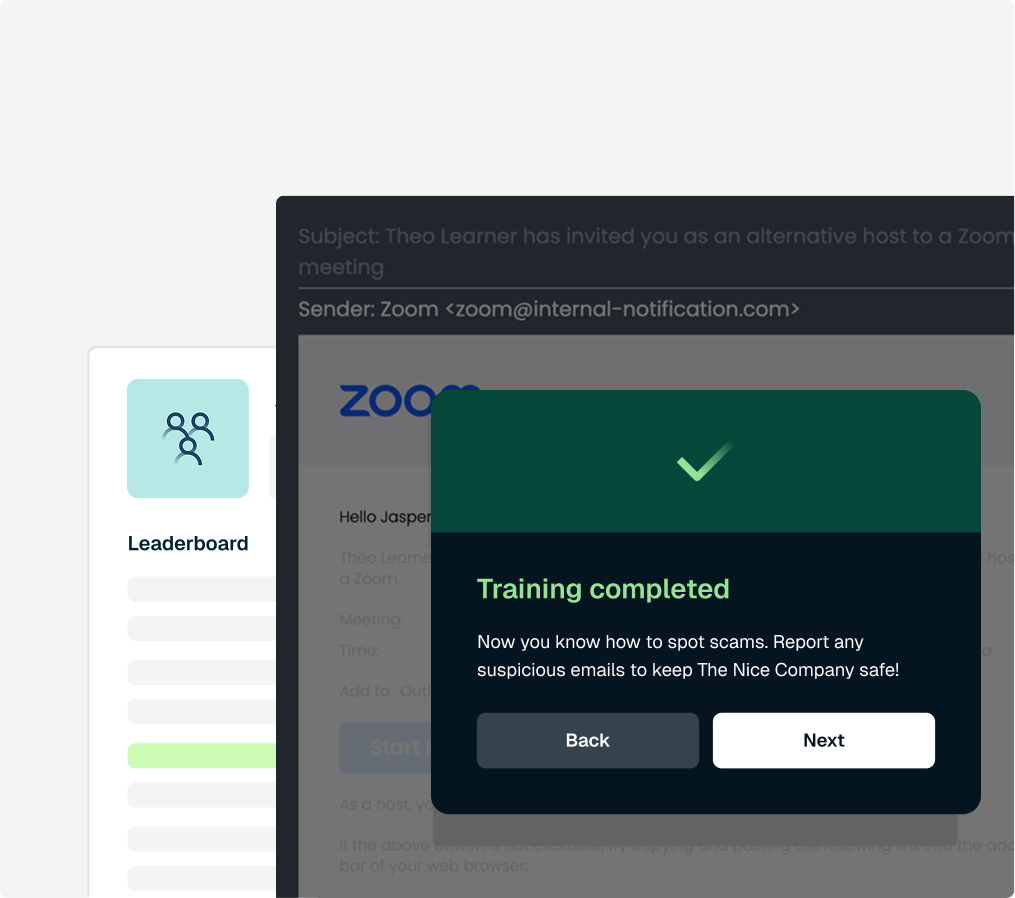

1. Hoxhunt: Deepfake attack simulation inside a human risk platform

At Hoxhunt, we've built hyper-realistic deepfake simulations into our security awareness training and human-risk stack: a phishing email routes users to a fake Teams/Meet/Zoom page, where a cloned executive avatar (voice + video) urges action - then instant, private coaching closes the loop. It’s designed for AI social-engineering and voice phishing at enterprise scale.

What makes it different

- Multi-step, multi-channel realism: email → fake video conference → urgent request - mirrors the real-life business email compromise and deepfake video conference scams your teams now face.

- Personalized, gamified training: boosts engagement and measurable behavior change - adaptive phish difficulty and micro-lessons, not one-size-fits-all.

- Human-risk analytics & risk scoring: central dashboards track reporting/time-to-report and high-risk cohorts for targeted interventions.

- Market proof: 4.8/5 on G2 (3k+ reviews), 4.9/5 on Capterra; Gartner Customers’ Choice (2024) for SAT.

Trade-offs to consider

- As an enterprise-grade platform with advanced features, Hoxhunt can be resource-intensive for smaller organizations.

- Overall, any downsides reflect the comprehensive nature of the solution - at Hoxhunt, we prioritize depth and customization over a one-size-fits-all approach.

What's actually included for deepfake training?

- Simulation flow: phishing email → attacker-controlled Teams/Meet/Zoom look-alike → AI-generated avatar drops a chat link (credential harvester) → “safe-fail” page + micro-lesson.

- Likeness/voice options: clone approved executives for AI-generated voice and deepfake videos; can run voice-only versions.

- Built-in awareness content: short modules on deepfake detection, real cases, and “guess-the-real-voice” exercises.

How it deploys in your stack

- Part of the Hoxhunt platform - not a standalone tool. Hoxhunt’s deepfake training works as a fully integrated simulation module within its broader platform.

- Delivered via email + browser - no endpoint agents; leverages existing O365/Gmail routes and simulates Teams/Google Meet/Zoom UX.

Best-fit scenarios

- Ideal for mid-size to enterprise organizations that are concerned about sophisticated social-engineering fraud, such as CEO impersonation in wire transfer requests.

- Sectors like finance, investments, and large enterprises - where a deepfake of a CFO or CEO could trigger a costly transfer - benefit significantly.

- Distributed/regulated enterprises: Teams running modern security awareness programs with measurable cyber resilience goals.

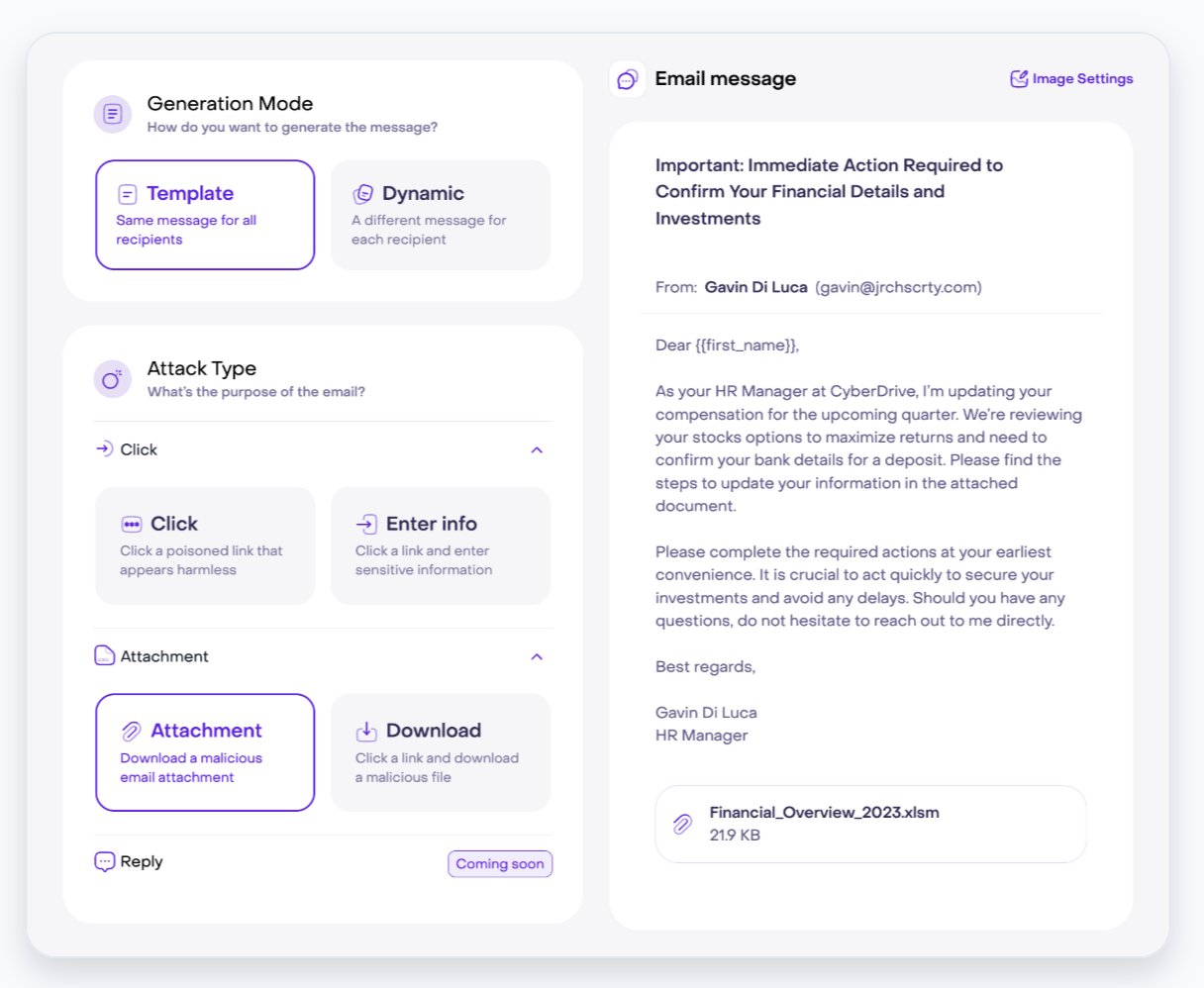

2. Jericho Security: AI-native deepfake simulations

Jericho Security is an AI-native awareness platform that runs agentic, conversational simulations (email, SMS, AI voice vishing) that adapt in real time. The startup has a DoD/AFWERX Phase II contract and a self-service 7-day trial, but it’s early-stage, so buyers should vet integrations and breadth.

What makes it different

- Agentic, two-way simulations: Jericho positions “training that talks back”: emails that continue threads, SMS that escalate, and voice prompts that adapt in real time.

- Multi-channel: Product pages highlight email/SMS plus voice phishing and deepfake-style meeting requests for modern AI social engineering.

- Accessible delivery: Self-service SaaS with a 7-day free trial.

Trade-offs to consider

- Launched recently, with limited third-party reviews/case studies compared to more established vendors; expect to do more proof-of-value testing.

- Documentation markets “no-code” ease but doesn’t list a deep, public catalog of HR/SIEM/ITSM integrations; confirm your stack fit.

- Strength is AI social engineering; if you need broad compliance modules (ISO/PCI/HIPAA, policy CBTs) or more comprehensive training content you may need a second tool.

What's actually included for deepfake training?

- AI-powered training platform: Accessible via the web, where admins can configure automated attack simulations and training workflows.

- Conversational phishing & vishing: Campaigns that carry on a dialogue (email/SMS) and AI voice calls.

- Admin control & analytics: Self-service UI to launch simulations, track failures, response times, and training performance.

How it deploys in your stack

- Runs in the cloud via email/SMS/voice with no endpoint software; connects through standard mail routes/APIs and operates alongside your existing SAT/email security.

Best-fit scenarios

- Mid-market to enterprise with a SAT baseline that want agentic, conversational multi-channel drills to stress-test approval workflows and BEC paths.

- Finance/treasury/procurement-heavy, distributed, or high-exposure orgs where CEO/CFO impersonation or vendor bank-change fraud would be costly.

- Teams with governance/comms bandwidth (consent + helpdesk scripts) and risk dashboards to ingest outputs; if you need all-in-one training + deepfake with lower lift, Hoxhunt might be a better option.

3. Breacher.ai: Boutique deepfake red-teaming

Breacher.ai is a boutique, agentic deepfake simulation platform that runs cross-channel drills delivered as a managed service or MSP white-label. It emphasizes zero-integration deployment and patent-pending risk scoring; strong realism, but it’s an add-on, not an all-in-one training platform.

What makes it different

- High-fidelity, cross-channel simulations: Agentic AI drives voice, video, email/SMS, and meeting-lure campaigns from an admin dashboard or as a managed engagement.

- Zero-integration & MSP-ready: Can run externally with no software install; offers white-label and multi-tenant partner delivery.

- Risk scoring & reporting: Deepfake risk score benchmarks your org vs. peers for executive workflows and finance processes.

Trade-offs to consider

- It’s a specialist simulator with a narrow scope - not a broad security awareness training platform; you’ll still need SAT content, LMS/compliance, and ongoing coaching elsewhere.

- Younger vendor, fewer public references: Emerging product with limited third-party reviews; expect extra diligence and pilots.

- Positioning stresses “no integration required,” which is fast to start but lighter on directory/HRIS/SIEM connectors than mature suites - verify during scoping.

What's actually included for deepfake training?

- Fully managed deepfake simulation service: Practically, this means Breacher will work with you to understand your organization’s crown jewels and threat scenarios, then design tailored attacks.

- Managed or self-serve: Fully managed external runs, plus options for partners to deliver under their brand; older pricing collateral highlights MSP focus.

- Breacher’s cloud platform orchestrates the delivery: it can place automated calls to employees at scheduled times, send the emails, etc., with your approval. After the simulation, Breacher provides analytics: which users fell for what step, transcripts of any responses, and a risk score that quantifies the vulnerability.

How it deploys in your stack

- Breacher.ai is typically an add-on to your existing stack. If you already use a platform for your general awareness and phishing training, you’d bring in Breacher for periodic advanced simulations (perhaps a few times a year or for high-risk groups).

- It doesn’t require ripping out anything - Breacher can operate independently, sending emails and calls without complex integration.

- Since they emphasize working alongside current tools, you don’t have to disable your normal phishing tests; Breacher just goes further.

Best-fit scenarios

- Best for organizations that already have a solid awareness foundation and want to validate their readiness against top-tier threats.

- Regulated/high-stakes sectors (finance, law, defense) needing evidence that executive decision-making and financial workflows withstand AI social engineering.

- MSPs/consultancies adding a white-label deepfake offering to their catalog.

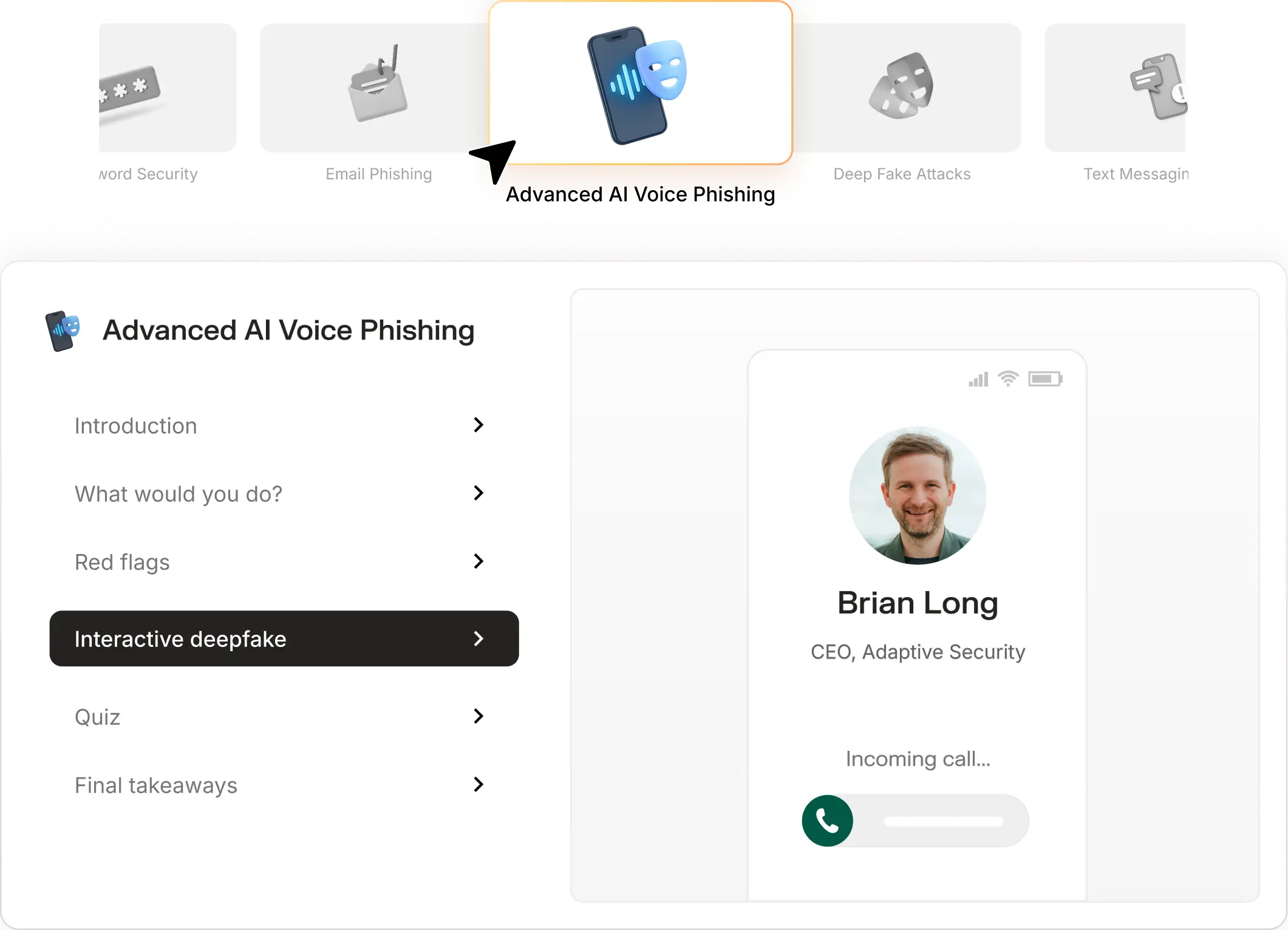

4. Adaptive Security: HRM with deepfake coverage

Adaptive Security is an awareness platform that uses LLM agents + OSINT to generate conversational, multichannel simulations with broad integrations and board-ready reporting. It’s powerful and fast-evolving, but program complexity and governance load are real trade-offs, especially for lean teams.

Why it stands out

- Agentic, personalized simulations: Campaigns pull public data (press releases, job posts, exec speeches) to craft hyper-specific lures across email/SMS/AI voice/video.

- OpenAI Startup Fund participation: useful credibility, not proof of fit.

- Automation + integrations claims (HRIS/ITSM/Slack/API) and board-level mapping (ISO/NIST/GDPR).

Trade-offs to consider

- Features demand admin time for governance, tuning, and comms. Expect continuous iteration.

- Some capabilities remain in fast development; confirm GA vs. beta in scoping.

- OSINT-driven personalization can raise questions in regulated orgs - decide boundaries up front.

- It’s a replacement-style suite; if you only want a few deepfake drills, this is heavy compared with running them inside an existing platform.

What's actually included for deepfake training?

- Custom executive deepfakes and AI voice in campaigns (e.g., HR memo → video portal with exec-lookalike → login lure), with instant debrief and adaptive difficulty.

- Conversational vishing/smishing: tied to the same narrative; board-ready risk reporting down to user level.

- Control Center + API: Centralized admin, SOC/BI export via public API for programmatic reporting.

How it deploys in your stack

- Adaptive Security is meant to be a platform replacement for legacy SAT (Security Awareness Training) solutions.

- It connects with your email (for sending simulations via SMTP or even directly injecting into mailboxes for realism), with Slack/Teams (to send training nudges), and with directories (to auto-provision users and segment them by role).

- If you have an LMS or intranet, Adaptive might integrate to pass training completions there too.

Best-fit scenarios

- Mid-market/enterprise with a staffed awareness/HRM function, appetite for agentic AI + OSINT, and a mandate for compliance mapping to the board.

- Teams replacing multiple tools and comfortable governing deepfake scope (likeness consent, safety cues, internal escalation).

5. Revel8: EU-native deepfake simulations

Revel8 is a Europe-based platform focused on AI social engineering and deepfake training. It leans hard on OSINT personalization (hundreds of public data points), offers adaptive playlists per user, and emphasizes EU compliance (NIS2 mindset, ISO 27001 posture). Powerful for EMEA; newer and more complex outside that lane.

Why it stands out

- Full attack-surface coverage: unified, multichannel narratives (phish → SMS → deepfake voice → video call).

- Heavy OSINT tailoring: scans 400+ public signals to hyper-personalize lures and deepfakes.

- European posture: ISO 27001:2022, NIS2-aligned messaging, EU data residency.

Trade-offs to consider

- Young, Euro-centric footprint with fewer global references; localization strongest in EMEA.

- Program complexity means more admin/governance lift.

- Dependency on public exposure means low-OSINT orgs see less “uncanny” tailoring.

- Training content likely covers the basics, but the catalog is not yet as large as those of older suites.

- Calibration required: multi-pronged deepfake drills can overwhelm users if rolled out cold.

What's actually included for deepfake training?

- AI-driven training platform: Once you onboard (upload users and let it do an OSINT scan of your domain and employees’ public footprint), it creates risk profiles for everyone.

- Human Firewall Index: a score that quantifies each person’s security awareness level.

- Adaptive simulation playlists: each user or group receives a series of simulated attacks over time, tailored to their profile.

How it deploys in your stack

- Standalone awareness/HRM or augmentation for EMEA regions.

- Directory + mail integrations; cloud telephony for calls; EU data residency options.

- Reporting buttons + mobile call-reporting app; optional SIEM/SOC links.

Best-fit scenarios

- Mid-to-large EU enterprises needing NIS2-friendly deepfake/BEC training with multilingual rollout.

- Multi-country EMEA programs that want OSINT-driven realism and adaptive sequencing.

- Orgs that have seen multi-channel social engineering and want to test voice/video-enabled fraud paths.

6. Resemble AI: voice deepfake training

Resemble AI is a voice-first deepfake simulation platform that runs live, conversational vishing drills across phone, WhatsApp, and email.

Why it stands out

- Live, two-way vishing simulations: LLM “agents” hold context, handle objections, and adapt in real time, mirroring high-pressure fraud calls.

- Fast, high-fidelity cloning: Voice models spin up from ~5-10s of audio, enabling exec-impersonation drills with minimal capture time.

- Multi-channel attack chains: Scenarios span phone/voicemail, WhatsApp voice notes, SMS, and email to model modern cross-channel lures.

Trade-offs to consider

- It’s voice-led deepfake training - not a full security awareness training suite. You’ll still need broader SAT, LMS/compliance, and multi-topic content. (Compare to platforms that embed deepfake simulation inside a mature SAT stack.)

- Phone-number pickup rates, caller-ID handling, and telecom setup add friction compared with email-only simulators.

- Clones are strong, but tough listeners may still catch cadence artifacts; quality hinges on sample capture and context tuning.

What's actually included for deepfake training?

- Voice-first, interactive simulations that escalate from voicemail to live call and coordinated messages; employees practice verification under pressure.

- Automated campaigns & adaptive difficulty that adjust to user performance, plus per-user/team risk scoring and board-ready reporting.

How it fits in your stack

- Add-on model or regional module - run it alongside your primary SAT/Human-Risk platform to cover AI-powered voice phishing and exec-impersonation gaps.

- Public pages advertise on-premises deployment for sensitive environments; verify scope/licensing in scoping.

- Telephony + messaging integration for real-channel delivery; dashboards expose user/team risk for export to SIEM/BI via API (vendor pages reference programmatic reporting).

Best-fit scenarios

- Executive-impersonation drills for Finance/AP, EA, treasury wire-approval workflows.

- Frontline call centers/helpdesks in banking, healthcare, insurance, telecom - high exposure to voice fraud and account-reset social engineering.

- Public sector/critical industries.

How to evaluate deepfake simulation platforms (feature checklist)

1) Realism & coverage

- Modalities: deepfake voice, deepfake video/meeting-lures.

- Narratives: chained social engineering flows (phishing email → meeting → voice follow-up).

- Localization: languages/accents for global teams.

2) Safety, ethics, and governance

- Consent-first likeness policy (executives, internal personas); auditable approvals.

- Psychological safety: visible/traceable cues; immediate private coaching; no public shaming.

3) Measurement that matters

- Risk scoring: per-user and cohort risk scores tied to real behaviors (callbacks, reporting).

- Behavior analytics: dwell time, verification-via-callback rate.

4) Platform fit & integration

- Email delivery: O365/Gmail injection (no blanket whitelisting), high deliverability.

- Identity: SSO/SCIM; dynamic targeting by org, role, high-risk cohorts.

5) Operational lift

- Admin time: campaign setup minutes vs hours.

- Automation: adaptive difficulty, auto-enrollment after failures, micro-lessons.

- Content breadth: security awareness training coverage beyond deepfakes (if you want one platform versus a specialist add-on).
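One way to turn the checklist above into a comparable number is a simple weighted scorecard. The sketch below is illustrative only: the category keys, the weights, and the 1-5 rating scale are assumptions chosen for the example, not part of any vendor's tooling, and you should set weights to match your own priorities.

```python
# Hypothetical weights for the five checklist categories (they sum to 1.0).
# Adjust to reflect what matters most in your program.
WEIGHTS = {
    "realism_coverage": 0.25,
    "safety_governance": 0.20,
    "measurement": 0.25,
    "platform_fit": 0.15,
    "operational_lift": 0.15,
}


def weighted_score(ratings: dict[str, int]) -> float:
    """Combine 1-5 ratings per checklist category into one weighted score."""
    missing = set(WEIGHTS) - set(ratings)
    if missing:
        raise ValueError(f"missing ratings for: {sorted(missing)}")
    for category in WEIGHTS:
        if not 1 <= ratings[category] <= 5:
            raise ValueError(f"{category} rating must be between 1 and 5")
    return round(sum(WEIGHTS[c] * ratings[c] for c in WEIGHTS), 2)


# Placeholder ratings from a hypothetical pilot; not real vendor data.
pilot_ratings = {
    "realism_coverage": 4,
    "safety_governance": 5,
    "measurement": 3,
    "platform_fit": 4,
    "operational_lift": 2,
}
print(weighted_score(pilot_ratings))
```

Scoring every shortlisted vendor with the same weights keeps the comparison consistent, and forcing a rating for every category makes gaps in a vendor's coverage visible rather than silently ignored.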

Services vs. platforms: deepfake red-teaming or integrated training?

You have two models for deepfake simulation: boutique services (one-off, hyper-real drills) and integrated SAT/human-risk platforms (multichannel training with risk scoring and behavior analytics). Services prove a point; platforms change behavior at scale. Most enterprises anchor on a platform and add a yearly boutique exercise for board validation.

- If you need auditable, month-over-month risk reduction with minimal orchestration, choose an integrated SAT/human-risk platform.

- If you need a single high-theatre validation for executives, commission a boutique deepfake red-team - then feed findings back into your platform.

Practical guidance

- Anchor on platform: Use the platform to push multichannel deepfake simulation (email → meeting lure → deepfake voice) and convert outcomes into targeted coaching and risk scoring.

- Layer services sparingly: Run a boutique engagement 1-2×/year to stress-test financial workflows and executive paths.

- Procurement litmus test: If your RFP lists SSO/SCIM, SIEM/BI exports, mailbox injection, risk dashboards, you’re in platform territory.

Bottom line: Services prove; platforms improve. If you need enterprise-grade, low-overhead reduction of social-engineering risk, choose the platform as the default and keep a boutique drill in reserve if needed. Platforms like Hoxhunt will also allow you to run deepfake simulations as less frequent benchmarks.

Which platform fits your organization?

Hoxhunt deepfake simulation: integrated, enterprise-ready

Hoxhunt's deepfake attack simulation runs inside our human-risk platform: multichannel social engineering drills (phishing email → meeting lure with deepfake avatar/AI-generated voice) followed by targeted coaching and reporting that feeds risk scores.

How a typical scenario runs:

- Admin selects cohort and executive likeness (consented), chooses meeting UI (Teams/Meet/Zoom), and deploys.

- User receives a tailored phishing email → joins a mock video meeting where the cloned exec makes an urgent request (e.g., approval/credentials).

- If they engage, the flow transitions to a safe credential-harvester page; Hoxhunt then delivers a private micro-lesson and logs behavioral signals.

- Admins see objective metrics (engagement, hesitation, cues missed) in the console.
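The scenario steps above can be pictured as a small state machine. This is a conceptual sketch, not Hoxhunt's implementation or API: the step names, the event names, and the idea that a user report ends the flow early are all assumptions made for illustration.

```python
from enum import Enum, auto


class Step(Enum):
    EMAIL_DELIVERED = auto()
    MEETING_JOINED = auto()
    SAFE_PAGE_SHOWN = auto()
    MICRO_LESSON = auto()
    REPORTED = auto()


# Hypothetical event names; a real platform would define its own.
TRANSITIONS = {
    (Step.EMAIL_DELIVERED, "open_meeting_link"): Step.MEETING_JOINED,
    (Step.MEETING_JOINED, "engage_with_request"): Step.SAFE_PAGE_SHOWN,
    (Step.SAFE_PAGE_SHOWN, "acknowledge"): Step.MICRO_LESSON,
}
TERMINAL = {Step.MICRO_LESSON, Step.REPORTED}


def replay(events):
    """Return the sequence of steps a user passes through for a list of events."""
    state = Step.EMAIL_DELIVERED
    trail = [state]
    for event in events:
        if state in TERMINAL:
            break
        if event == "report":
            # Assumption: reporting at any point ends the simulation.
            state = Step.REPORTED
        else:
            # Unknown or out-of-order events leave the state unchanged.
            state = TRANSITIONS.get((state, event), state)
        if state is not trail[-1]:
            trail.append(state)
    return trail


# A user who joins the fake meeting, then reports it.
print([s.name for s in replay(["open_meeting_link", "report"])])
```

Recording the full trail, rather than only the final outcome, is what makes it possible to see where in the chain a user hesitated or disengaged.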

Deepfake attack simulation platforms FAQ

Should I go with a platform or boutique service?

For repeatable reduction, use an integrated security awareness training/human risk platform (unified risk scores, coaching, behavior analytics). Consider using a yearly boutique red-team drill for board validation.

What metrics actually matter?

- Reporting rate

- Verification-via-callback rate (finance/helpdesk)

- Credential-submission attempts to credential harvesters
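As a rough illustration of how these three metrics could be computed from raw simulation outcomes, here is a minimal sketch. The `Outcome` record and its field names are hypothetical; real platforms export their own schemas, so treat this as a template for your own reporting rather than a description of any product.

```python
from dataclasses import dataclass


@dataclass
class Outcome:
    """One user's result in one simulation (hypothetical schema)."""
    user: str
    reported: bool
    verified_by_callback: bool
    submitted_credentials: bool


def cohort_metrics(outcomes: list[Outcome]) -> dict[str, float]:
    """Summarize reporting, callback verification, and credential submissions."""
    total = len(outcomes)
    if total == 0:
        raise ValueError("no outcomes to summarize")
    return {
        "reporting_rate": sum(o.reported for o in outcomes) / total,
        "callback_verification_rate": sum(o.verified_by_callback for o in outcomes) / total,
        "credential_submissions": sum(o.submitted_credentials for o in outcomes),
    }


# Made-up sample data for a four-person finance cohort.
sample = [
    Outcome("a", reported=True, verified_by_callback=True, submitted_credentials=False),
    Outcome("b", reported=True, verified_by_callback=False, submitted_credentials=False),
    Outcome("c", reported=False, verified_by_callback=False, submitted_credentials=True),
    Outcome("d", reported=False, verified_by_callback=False, submitted_credentials=False),
]
print(cohort_metrics(sample))
```

Tracking the same three numbers per cohort and per campaign makes month-over-month trends comparable.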

What governance do we need (ethics, consent)?

Consent for any likeness (exec AI voice models/deepfake videos), psychological-safety cues, private remediation, and retention controls aligned to ISO 27001. Update incident response playbooks with callback/dual-control steps.

Do we need deepfake detection tools too?

Helpful as a gate on high-risk actions (e.g. real-time voice scoring). Treat detectors as signals, not truth - pair with training and policy (call-back, dual-control).
