This article is based on insights from Jeffrey Brown (CISO advisor at Microsoft), recorded live on the All Things Human Risk Management Podcast. The full conversation is available on the podcast.

What is cybersecurity communications?

Cybersecurity communications is how security leaders translate risk into clear business decisions and behaviors. It uses BLUF (Bottom Line Up Front), which means leading with the ask and its impact. It also avoids jargon, relies on simple metaphors, and proves effectiveness with measurable actions rather than training completions or email opens.

Practitioner’s guidelines

- Covers every audience. CISOs communicate with everyone, from administrative assistants to the board, so messages must flex by role and context rather than default to tech-speak.

- BLUF, explicitly. Bottom Line Up Front = start with your decision/ask and the business impact; only then add essentials. Executives have little time, and many conversations end successfully once the BLUF is clear.

- Make it relatable. Use the car-safety metaphor to explain layered controls - brakes (prevent), airbags/crumple zones (mitigate), recalls (respond) - so non-technical leaders understand why multiple controls exist.

- Behavior > awareness. Design communications to drive a single action (e.g. report phishing). When Brown's team simplified reporting to one click, reports jumped from 6 to 1,000 in the next exercise.

- Right channel, right depth. Don’t default to email; pick the medium (live brief, one-pager, town hall) that fits the decision and audience.

- Repetition matters. Plan to repeat core messages 7 to 14 times before they stick, especially for non-security audiences.

Anti-patterns to avoid

- Punitive comms. CEO “scold” voicemails or removing email access after a phish click backfire, suppressing incident reporting and eroding trust. Opt for recognition and rewards over shame.

- Vanity metrics. Counts like "billions blocked" or training completions say little on their own. Tie metrics to business outcomes or behavior change instead (e.g., report-rate trend, time-to-contain).

How to build a security awareness communication plan (that drives behavior)

A security awareness communication plan should be behavior-first, year-round, and role-tailored. Use BLUF for leaders, make reporting phish one-click, reward (not punish) users, and close the loop by measuring which messages actually change behavior. Keep content short, repeat key messages, and align topics to real incidents and business risk.

Effective cybersecurity communications checklist

- Define the one behavior you’ll move first. Make reporting phish dead simple (one-click) and socialize it everywhere; measure the change after launch.

- Design a real feedback loop. Don’t stop at opens; correlate messages to behaviors (e.g., report-rate trend) and use quick, 1–2-question pulse surveys to learn what resonated.

- Reward > punish. Punitive programs (CEO scold-voicemails or stripping email access) suppress reporting and don’t work; default to incentives and coaching. Read our guide to managing repeat clickers here.

- Tailor by audience and role. Tech teams need depth; non-tech teams need relevance and plain language. Accept that good comms = more work for the sender.

- Cadence: little-and-often. Replace marathon CBTs with bite-sized, engaging sessions; keep people looking forward to the next touch.

- Year-round program, not just Cybersecurity Awareness Month in October. Build a comprehensive mix (courses, live 1:1s, posters, demos) and keep repeating core messages.

- Repetition budget. Plan to repeat priority messages until they stick.

- Pick topics from actual risk. Let real threat reporting data drive content.

- Teach process over tricks. Use light gamification (e.g., deepfake spot-checks) to show why business process verification beats gut feel.

- Crisis comms ready. Rehearse with incident response tabletops. During a real incident, state only facts, avoid speculation, and brief with BLUF.

- Give metrics a job. Tell a progress story (e.g. click-rate downtrend or reporting uptrend), tie to business impact, and cut vanity counts.

“You always start with culture… The fact is that you have to really start with the basics… do they know how to report an incident?” - Jeffrey Brown

How should you run crisis communication during a data breach or ransomware attack?

In a cyber breach scenario - whether a data breach, ransomware attack, malware infiltration, or targeted phishing - lead with BLUF (the ask + business impact), communicate only verified facts, and reference a tested incident response plan (tabletops help). Resist speculation under pressure; capture lessons and fold them back into IR for continuous improvement.

A breach day is a communication stress test. In the first internal meetings, establish situational awareness: what’s confirmed, what’s unknown, and what’s next - resisting executive pressure to speculate.

Then brief the C-suite with a BLUF executive summary (“ask + business impact”), not tooling minutiae; boards engage on risk, value, and strategy, not 30,000 vulnerabilities.

Treat customer communications as product UX: short, concrete, and human. If customer data or personal information may be involved, say exactly what you know now and what you’re doing next; promise follow-ups on a set cadence. Use digital channels intentionally (email vs. status page vs. press Q&A) - format is part of your communications infrastructure, and the wrong medium undermines trust.

Regulator-facing updates should translate cybersecurity threats into operational risk and business impact (“claims processing downtime = fines/minute”), not information technology jargon. Metrics need a job: trend lines (e.g. phishing simulation click rate down, reporting up) beat “5B attacks blocked.” The same discipline helps keep media coverage consistent and supports public relations efforts to repair reputation.

Post-containment, close the loop publicly only when facts are solid. Internally, explain which security practices and security controls held, where human error or attack surface design contributed, and what changes are funded against explicit business goals. Storytelling with real examples helps people remember why the control matters.

How to write the executive summary for cyber incidents

Open with BLUF - your decision/ask and the business impact - then list only verified facts, current risk to revenue/operations, and metrics with a job (progress, not vanity counts). Skip tooling detail; boards want risk, value, strategy. State what’s unknown, avoid speculation, and give a time for the next update.

Anatomy of a high-signal exec summary

- BLUF header: the ask/decision + explicit business impact (e.g., downtime, fines, customer implications). Many exec conversations end successfully once the BLUF is clear (“make it happen”).

- Facts only: what is confirmed now; label unknowns; no speculation under pressure.

- Business risk framing: translate issues into operational impact; avoid tool tutorials in board/C-suite forums.

- Metrics with a job: trend behaviors that change decisions (e.g., report-rate up, containment time down), not “billions blocked.”

- Next step & owner: precise action, accountable owner, and next update time.
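Some teams find it easier to keep this anatomy consistent under pressure if it is a fill-in template rather than a blank page. The sketch below shows one way to enforce the order described above. The class, its field names and the output layout are hypothetical, and it is not a Hoxhunt or Microsoft tool. The BLUF comes first, unknowns are labeled rather than guessed, and every summary ends with an owner and a next-update time.

```python
from dataclasses import dataclass, field


@dataclass
class ExecSummary:
    """Hypothetical template for a BLUF-first incident summary."""
    ask: str                    # the decision you need from leadership
    business_impact: str        # downtime, fines, customer implications
    confirmed_facts: list[str]  # only what is verified right now
    unknowns: list[str]         # labeled explicitly instead of speculating
    next_step: str
    owner: str
    next_update: str            # when leadership will hear from you next
    metrics: list[str] = field(default_factory=list)  # trends with a job, not vanity counts

    def render(self) -> str:
        # Refuse to produce a summary without a real bottom line.
        if not self.ask or not self.business_impact:
            raise ValueError("A BLUF needs both an ask and a business impact.")
        lines = [f"BLUF: {self.ask} Business impact: {self.business_impact}"]
        lines.append("Confirmed facts:")
        lines.extend(f"- {fact}" for fact in self.confirmed_facts)
        # Unknowns are always shown, even when there are none, so readers
        # can see they were considered rather than skipped.
        lines.append("Unknown (not yet confirmed):")
        lines.extend(f"- {item}" for item in self.unknowns or ["None identified"])
        if self.metrics:
            lines.append("Metrics:")
            lines.extend(f"- {metric}" for metric in self.metrics)
        lines.append(f"Next step: {self.next_step} (owner: {self.owner})")
        lines.append(f"Next update: {self.next_update}")
        return "\n".join(lines)
```

Technical appendices deliberately have no place in the template. If the BLUF earns a "make it happen," the rendered summary is already complete.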

What metrics belong (and why)

- Behavioral movement: e.g. one-click phish reporting drove submissions from 6 → 1,000 - evidence of real vigilance, not checkbox awareness.

- Operational progress: containment/eradication time, not total “attacks blocked.”

Common traps to avoid

- Speculation: answering beyond the facts to satisfy pressure. Label unknowns instead.

- Teaching tools to boards: keep to risk, value, and strategy; skip the acronyms.

- Over-detailing: if the BLUF earns a “make it happen,” stop; provide technical appendices only on request.

Metrics with a job: proving behavior change

Give every metric a job: either tell a clear progress story or drive a decision. Trend results over time, pair quantitative and qualitative signals, and always answer “so what?” Ditch vanity counts (e.g., “billions blocked”) and use behavior-led evidence like phish reporting rate and click-rate downtrends to secure action.

What to measure (behavioral + operational)

- Behavioral: phish reporting rate after you simplify reporting; click-rate trend.

- Operational: time to contain/eradicate vs. the last incident. Aggregate data over more than a month to show real movement.
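To turn these measures into a trend rather than a single snapshot, one simple option is to compute rates per simulation round and fit a least-squares slope across rounds. The sketch below does that for click rate and report rate. The record layout, function names and the three rounds of numbers are hypothetical and for illustration only, not real program data. The slope is a swapped-in technique of our choosing, not a method described in the podcast.

```python
from dataclasses import dataclass


@dataclass
class CampaignResult:
    period: str      # any label, e.g. "Q1"
    recipients: int  # users who received the simulated phish
    clicked: int     # users who clicked it
    reported: int    # users who reported it


def click_rate(c: CampaignResult) -> float:
    return c.clicked / c.recipients if c.recipients else 0.0


def report_rate(c: CampaignResult) -> float:
    return c.reported / c.recipients if c.recipients else 0.0


def slope(values: list[float]) -> float:
    """Ordinary least-squares slope per period: > 0 rising, < 0 falling."""
    n = len(values)
    if n < 2:
        return 0.0  # a single period cannot show a trend
    mean_x = (n - 1) / 2
    mean_y = sum(values) / n
    num = sum((x - mean_x) * (y - mean_y) for x, y in enumerate(values))
    den = sum((x - mean_x) ** 2 for x in range(n))
    return num / den


# Hypothetical numbers for illustration only, not real program data.
history = [
    CampaignResult("Q1", 1000, 120, 50),
    CampaignResult("Q2", 1000, 90, 150),
    CampaignResult("Q3", 1000, 60, 300),
]
clicks = [click_rate(c) for c in history]
reports = [report_rate(c) for c in history]
print(f"click-rate trend: {slope(clicks):+.3f} per quarter")    # negative = improving
print(f"report-rate trend: {slope(reports):+.3f} per quarter")  # positive = improving
```

A slope uses every period instead of comparing only the first and last, so one noisy month moves it less. The same `slope` function can be applied to a list of time-to-contain values, where a negative trend is the improvement.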

Make metrics decision-ready

- Run the “so what?” test on each chart; if it doesn’t influence a decision, rework it or drop it.

- Frame the outcome in business terms (e.g. claims processing downtime → regulator fines per minute) instead of tool status.

- Combine quantitative targets with qualitative context so audit discussions don’t turn into debates over red/amber/green ratings without evidence.

At Hoxhunt, the most critical outcome we track is real threats reported: it shows that training is carrying over into defense against real-world attacks.

From awareness to culture: psychological safety, not punishment

Security culture changes when people feel safe to report mistakes and see their actions rewarded. Jeffrey Brown advises replacing punitive tactics with psychological safety, clear processes, and year-round reinforcement.

Why punishment backfires

Brown’s real-world examples - critical CEO voicemails and proposals to remove email access - created bad days at work that taught employees to hide mistakes. That’s the opposite of what you want during real incidents. Culture moves forward when reporting is encouraged, not when “clickers” are shamed.

Build psychological safety (what to do)

Make reporting effortless and recognize it publicly. Brown’s teams handed out small phishing trophies and praise; peers started asking about them, spreading the message organically.

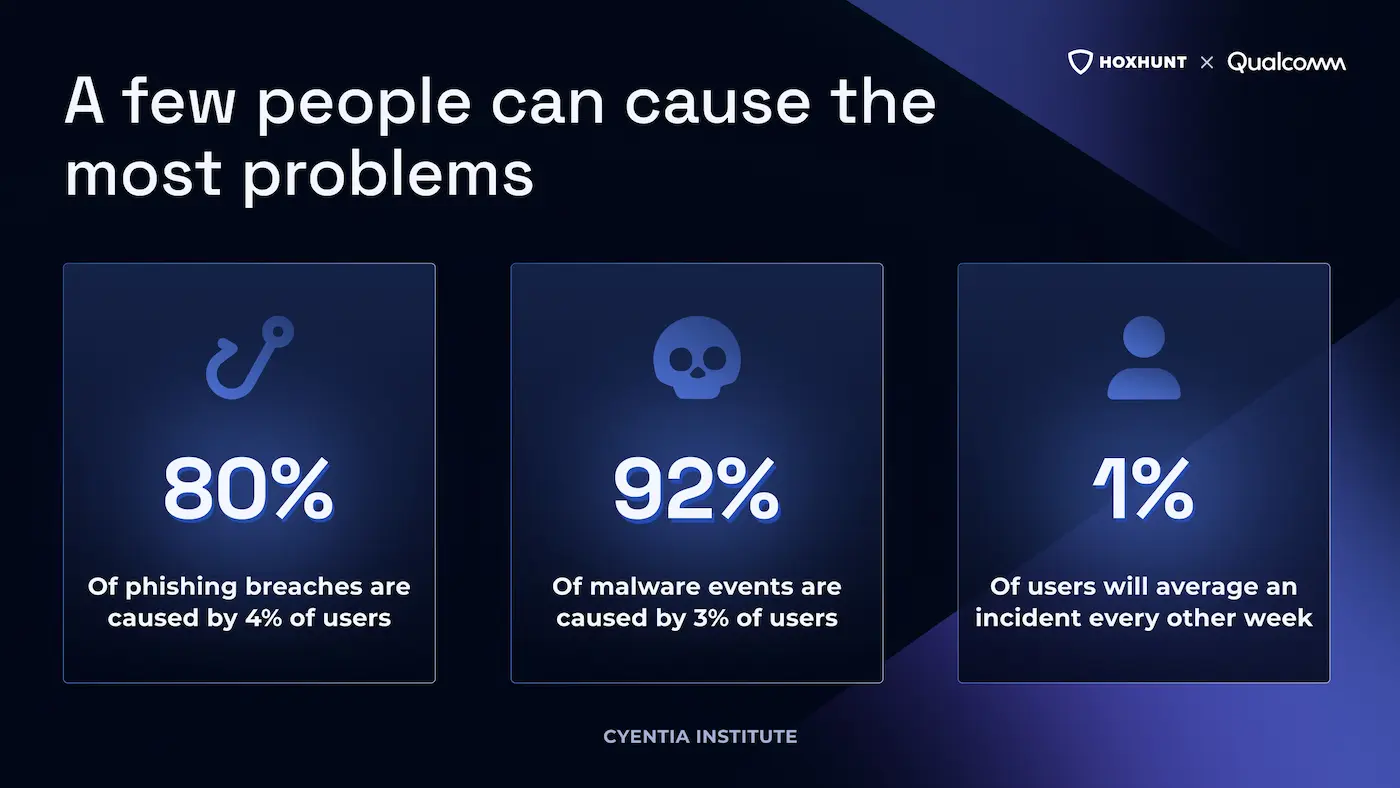

How Qualcomm turned their 1,000 highest-risk employees into their top performers

Qualcomm’s CSO50 Award-winning "Worst-to-First Employee Phishing Performance" initiative transformed their 1,000 highest-risk employees into model cyber citizens by enrolling them in Hoxhunt.

Combining AI with behavioral science and game mechanics, we helped Qualcomm finally reach employees who’d been deemed unreachable via traditional SAT tools’ dry, punishment-based curriculum.

AI & deepfakes: process verification over “trust the voice”

Assume deepfake technology is in play. Do not treat voice as a biometric; replace “trust the voice” with business-process verification - dual control and a call-back to a known number - especially for urgent payment or data requests.

Why voice can’t be trusted anymore

Jeffrey Brown warns that voice authentication is no longer a good biometric; rapid advances in voice generation make caller identity unreliable for high-risk actions. Treat this as a standing assumption in your comms and controls.

Train the process (not the hunch)

When “the CFO” calls for an urgent transfer, what happens next is a business process: pause, verify via a known channel, and require dual approval before funds move or sensitive data is released. Bake this into scripts and drills.
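To make "process over hunch" concrete for the teams who own payment workflows, here is a minimal sketch of the two checks named above: a call-back to a known number and dual approval. Everything in it is hypothetical: the class, the function names, the directory, and the 555-01xx numbers, which are reserved for fictional use. It is not the API of any real payments or finance system. The key design point is that nothing about the caller's voice or urgency appears in any check.

```python
from dataclasses import dataclass, field

# Hypothetical directory of call-back numbers, maintained outside the request
# channel (never taken from the call or email that made the request).
KNOWN_NUMBERS = {"CFO": "+1-555-0100"}
REQUIRED_APPROVERS = 2  # dual control


@dataclass
class TransferRequest:
    claimed_requester: str  # who the caller or message says they are
    amount: float
    callback_verified: bool = False
    approvers: set[str] = field(default_factory=set)


def record_callback(req: TransferRequest, number_dialed: str) -> None:
    """Only a call-back to the directory number counts; a number supplied by the caller never does."""
    expected = KNOWN_NUMBERS.get(req.claimed_requester)
    req.callback_verified = expected is not None and number_dialed == expected


def approve(req: TransferRequest, approver: str) -> None:
    req.approvers.add(approver)


def may_release(req: TransferRequest) -> bool:
    # The requester cannot count as one of their own approvers.
    independent = req.approvers - {req.claimed_requester}
    return req.callback_verified and len(independent) >= REQUIRED_APPROVERS
```

Consider a convincing "CFO" deepfake that supplies its own call-back number. The transfer stays blocked until someone dials the directory number and two independent people approve. That gate is the behavior the scripts and drills should rehearse.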

Use demos that stick

Brown’s team runs live deepfake demos (e.g. side-by-side videos, “which one is fake?”) and gamified spot-checks. People are often surprised - which is the point: show convincingly that perception can’t beat process.

Simulate deepfake attacks with Hoxhunt

Our training scenario measures your readiness against AI-powered threats. Users who go through a simulated scenario learn that phishing is not limited to text in a message; it can also deceive their eyes and ears.

We offer a deepfake attack simulation that’s fully customizable to your context. We’ll create a deepfake of one of your executives’ likeness and voice. The attack scenario plays out in a fake video meeting on the platform you normally use, such as Microsoft Teams, Google Meet, or Zoom. You select the recipients who would benefit most from the training.

Cadence & micro-learning: keeping security top-of-mind all year

Move from one-and-done courses to bite-sized, frequent touchpoints. Make the program year-round, tie topics to real incidents, and keep sessions engaging so people actually look forward to them. Expect to repeat core messages until they stick, especially for non-security audiences.

Design a little-and-often cadence

Short, recurring moments beat a single marathon CBT. Use quick lessons, posters, brief live sessions, and lightweight campaigns rather than long, infrequent modules. Keep it comprehensive but digestible.

Make it engaging (so people want the next one)

Jeffrey Brown describes storytelling formats where employees look forward to the next installment - proof that engagement rises when content is human, relevant, and not overly long.

Repeat, on purpose

Plan repetition; people need multiple exposures before behavior changes. Brown advises repeating key messages 7-14 times - and more when audiences are outside security.

Let incident data set topics

Use the threats you’re actually seeing this month to drive next month’s micro-campaigns. That keeps content tied to real risk rather than generic.

Avoid fatigue

Don’t blast constant alerts; excessive volume creates “security fatigue.” Keep signals focused, relevant, and spaced so important messages don’t get lost.

“This is a program that needs to persist over time, and you’re repeating messages so people understand our policies… little bite-sized training works really good too.” - Jeffrey Brown

Security champions & peer influence

Security champions multiply a small team’s reach. Identify engaged people in each department to act as liaisons - answering coworkers’ questions, relaying concerns to security, and localizing messages. Pair this with visible recognition (e.g., phishing trophies), which sparks peer-to-peer conversations and turns awareness into culture.

What champions actually do

Champions serve as trusted departmental contacts: they answer day-to-day security questions, bring issues back to the core team, and personalize guidance for their unit. This peer-to-peer model has gained traction in mid-market and enterprise programs because it scales influence without adding headcount.

Lightweight structure (keep overhead low)

Successful programs give champions extra training and simple incentives, then let them act as a two-way conduit - fielding questions and feeding local context back to security so messages feel relevant.

How to know it’s working

Watch for behavioral signals: reporting rates rise in the champions’ teams; questions route through champions before becoming tickets; and “how we do things here” becomes: report, then delete - not hide mistakes.

Storytelling that lands: the car-safety metaphor for layered controls

Use storytelling to explain security controls. Brown’s go-to: car safety.

- Brakes = prevention

- Airbags/crumple zones = mitigation

- Recalls = incident response.

You don’t buy a car for airbags - but without them you can’t drive fast safely. This reframes “why so many tools?” into business risk and resilience.

Why this metaphor works with execs

Leaders already understand cars, so no technical terms are required. The mapping makes layered defenses intuitive, avoids acronym soup, and keeps the focus on risk, value, and strategy rather than product names. It’s memorable enough to reuse in an executive summary or board slide without extra briefing.

How to present it (in two sentences)

“Bottom line up front: fund [control] so the business can ‘drive faster’ without increasing crash risk. If not, the next ‘crash’ (outage, fines, downtime) costs more than the control.” Keep it under 30 seconds; if you get a “make it happen,” stop there.

“You’re not gonna run out and go buy a Porsche because it’s got excellent brakes… So we have brakes that are kind of like the prevention… We have airbags and crumple zones as a mitigation… and then… we have an incident response process called recalls.” - Jeffrey Brown

Tabletops & prep: stress-testing incident comms before you need them

Preparation beats panic. Brown advises validating a tested incident response plan early by running tabletops that rehearse roles, decisions, and facts-only updates. Incidents can strike at any time (even before a CISO’s start date), so practice BLUF briefings and capture lessons to feed back into the plan.

- Make tabletops realistic (and early): Tabletops aren’t paperwork; they’re rehearsal. Brown’s first move in new roles was to inspect and test IR plans - because one incident landed a week before his start date. Run scenarios that force time-boxed decisions and executive BLUF updates.

- Drill “facts-only” comms under pressure: Teach teams to label what’s known vs. unknown, and to refuse speculation - even when senior leaders push for it. This muscle memory prevents rumor spirals on breach day.

- Close the loop into preparation: After every exercise, capture what went right/wrong - including wins that happened by chance - and update plans/playbooks so the next run starts stronger.

Soft skills that carry the room: active listening, presentation, nonverbal

Jeffrey Brown stresses that “soft skills” are hard requirements. Practice your presentation skills (it’s muscle memory), lead internal meetings with active listening (two ears, one mouth), and watch nonverbal communication to read the room. These skills turn BLUF briefs into fast decisions and keep meetings from spiraling into speculation.

Practice out loud (it’s a skill, not a trait)

Brown says he wasn’t “born” a natural presenter; repetition made standing up on short notice feel comfortable. Treat exec briefings like reps - tighten the BLUF, rehearse the opening sentence, and stop once you get the decision.

Lead with listening

“Two ears, one mouth” isn’t a cliché here. Brown frames active listening as the #1 communication tool - ask short questions, let stakeholders explain how their part of the business works, and echo back what you heard before proposing controls.

Read the room (and adjust)

In tense internal meetings, watch nonverbal communication (posture, eye contact, side conversations), then slow down or clarify. If you see confusion, restate the BLUF in plainer words; if you see agreement, stop explaining and move to the decision.

Medium × soft-skill combo

Slides and one-pagers help, but delivery matters more. Brown favors short visual aids to steer the conversation, plus human delivery: concise words, steady tone, and clear asks. That mix earns trust faster than an acronym-heavy deck.

Listen to the full podcast 🎧

Want to hear the full conversation with Jeffrey Brown? Watch or listen to the episode on all major podcast platforms.

What was covered in this episode:

- How to use Bottom Line Up Front (BLUF) to get faster decisions from executives and the board, and when not to.

- Turning “security talk” into business outcomes: mapping risk to revenue, resilience, and cost.

- Metrics that matter: designing KPIs that show behavior change, not just completion rates.

- Building a non-judgmental reporting culture (and why “Report > Don’t Click” works).

- Instant feedback loops: faster reinforcement without punishment in phishing drills.

- Story-first, stat-supported narratives that land across technical and non-technical audiences.

- Practical cadences and mediums: what to send to execs, managers, and the whole org and how often.

- Using analogies (brakes & airbags) to make layered defense memorable and actionable.

Cybersecurity communications FAQs

What’s the fastest way to brief executives during an incident?

Use BLUF: start with the ask/decision and business impact, then only the verified facts. Skip tooling; many exec conversations conclude once the BLUF is clear (“make it happen”).

Do phishing simulations still make sense?

Yes - treat them as training, not “gotchas.” Even pros can click under time pressure (e.g., on mobile), and attacks are getting more convincing; the goal is to keep people on their toes without punishment.

Should we punish users who click?

Avoid punitive tactics. They create “bad days at work,” suppress reporting, and backfire culturally; coach and reward instead.

What do boards actually want from the CISO?

Risk, value, strategy - not tool tutorials or tons of slides. Keep it concise and business-framed.

What makes a good metric for the board?

A metric with a job: show progress or drive a decision (e.g. report-rate up, time-to-contain down).

- Subscribe to All Things Human Risk to get a monthly roundup of our latest content

- Request a demo for a customized walkthrough of Hoxhunt