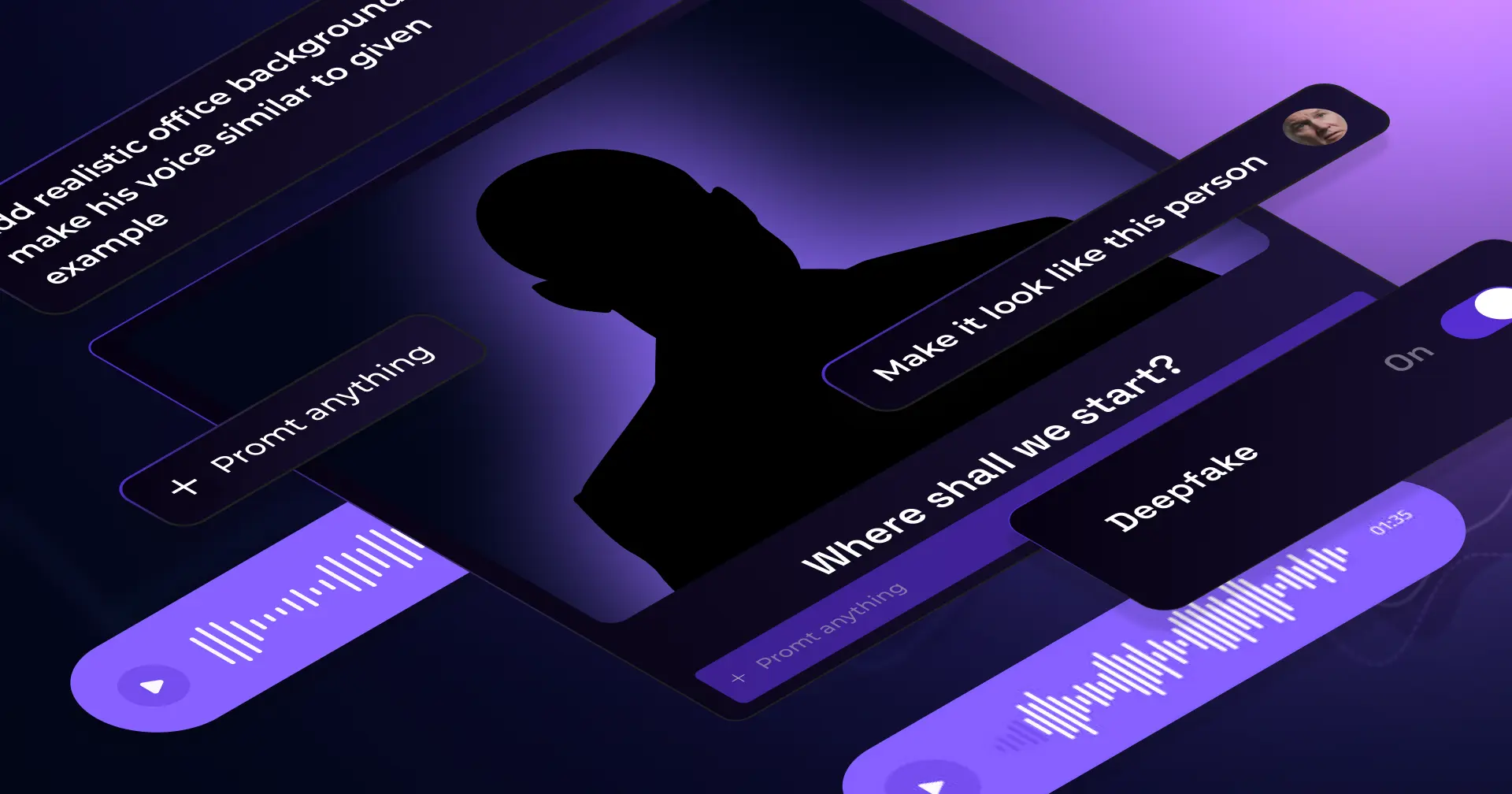

What is deepfake phishing simulation software?

Deepfake phishing simulation software is training tech that safely rehearses AI-generated voice and video social-engineering attacks. Employees receive a phishing email that leads to a fake Teams/Meet/Zoom call, where an avatar (likeness + voice) urges an action; safe fails trigger instant micro-training, building resilience against advanced fraud.

Platforms like Hoxhunt support multi-channel environments and can deliver either targeted benchmarks or broad awareness modules.

Key capabilities you should expect

- Multi-step, multi-channel flow (email + live “call” simulation) to reflect real attack paths.

- Realistic avatars (likeness + voice) with consent-first production using vetted providers.

- Instant, in-context micro-training when a risky action is taken - no public shaming, fast feedback.

- Responsible realism (e.g., light glitches/lag to support a “bad connection” pretext) without crossing psychological-safety lines.

How it differs from traditional phishing simulations

- Trains voice/video-based persuasion (not just text/email).

- Emphasizes reflexes over pixel-peeping (assume fakes can look real; scrutinize urgency and request).

- Aligns to high-value processes (payments, access, approvals) where deepfakes deliver real ROI for attackers.

Inside Hoxhunt’s deepfake simulation workflow

Hoxhunt's deepfake phishing simulation mirrors real attacks: a phishing email routes the user to a fake Teams page, a cloned-voice avatar urges an urgent action, and clicking the chat link triggers a safe fail with instant micro-training.

Step-by-step flow (multi-channel simulation)

- Phishing email (entry point): Users receive a realistic phishing simulation email (Outlook/Gmail) prompting a “quick call.” Hoxhunt's reporting button can capture reports directly from the inbox.

- Attacker-controlled “meeting” (landing page): Clicking opens a browser page that convincingly mimics Microsoft Teams / Google Meet / Zoom - mic and camera can be enabled so it feels live. Links can display as legit while redirecting under the attacker’s control.

- AI-generated voices + avatar (deepfake voice & video): A scripted avatar (likeness + voice cloning) urges an urgent action (e.g., open a “SharePoint” link). Light lag/glitch effects support the “bad connection” pretext without overstepping psychological safety.

- User action → safe fail (deepfake fraud simulation): Clicking the chat link triggers a simulation fail and instant micro-training. This is how Hoxhunt's security awareness training coaches away risky behavior - no public shaming, just fast, contextual feedback.

- Threat reporting path (before failure): If the user suspects AI-generated voice phishing and backs out, they can report the original email via the Hoxhunt button; it still counts as a successful report if done before failing.

- Admin visibility & outcomes: Admins view campaign metrics in real time and can trigger behavior-based nudges or training packages for users who exhibit risky behavior.
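
The outcome rules in the flow above can be sketched as a small classifier. This is a minimal illustration, not Hoxhunt's implementation: the event names (`email_reported`, `meeting_opened`, `chat_link_clicked`), the `Outcome` labels, and the `classify_outcome` function are all hypothetical. It assumes events arrive in chronological order, so a report only counts as a success if it happens before the chat-link click.

```python
from enum import Enum


class Outcome(Enum):
    REPORTED = "reported"        # reported the original email before failing
    FAILED = "failed"            # clicked the chat link (the defined safe fail)
    DROPPED_OFF = "dropped_off"  # opened the fake meeting, left without clicking
    NO_ACTION = "no_action"      # never engaged with the simulation


def classify_outcome(events: list[str]) -> Outcome:
    """Classify one user's result from a chronologically ordered event list.

    Event names are hypothetical placeholders for this sketch.
    """
    opened_meeting = False
    for event in events:
        # Whichever happens first, the click or the report, decides the outcome.
        if event == "chat_link_clicked":
            return Outcome.FAILED
        if event == "email_reported":
            return Outcome.REPORTED
        if event == "meeting_opened":
            opened_meeting = True
    return Outcome.DROPPED_OFF if opened_meeting else Outcome.NO_ACTION
```

For example, a user who opens the fake meeting, backs out, and then reports the email is classified as `Outcome.REPORTED`, while a report sent after the click still counts as `Outcome.FAILED`.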


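KPIs to track (beyond “click rate”)

- Reporting rate: reports made from the original email before a fail.

- Drop-off before action: users who exit the fake call without clicking.

- Final fail rate: chat-link clicks.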

Why the realism works but stays responsible

- Web environments closely mirror Teams/Meet/Zoom (familiar communication channels), including camera/mic prompts and domain styling.

- The experience is “real but responsible” - brief, targeted, and followed by micro-training to build behavioral resilience against deepfake scams.

Where this fits your security awareness program: run as targeted phishing simulation exercises (benchmark high-risk business processes) or as part of SAT training packages to reduce human cyber risk across the org.


Realism without risk: consent, ethics, and safety

Security teams need realistic simulations without “gotchas.” That's why Hoxhunt’s deepfake training is consent-only, pre-scripted, brief, and delivered in an attacker-style fake meeting - ending with micro-training instead of shame. Reporting happens from the original email, and rollouts are targeted one-offs with spacing, followed by additional training for risky users.

1) Consent & legal guardrails (non-negotiable)

- Contractual cover: deepfake amendment for customers (or deepfake language in the main contract).

- Customer-obtained consent: the customer confirms the likeness/voice owner has consented (one-line confirmation is sufficient).

- Providers & data use: voice via ElevenLabs; video via Synthesia/HeyGen; agreements specify no storage or model training on customer content.

2) “Real but responsible” employee experience

- No non-consensual cloning; scenarios are pre-scripted, not open-ended chats.

- Short, respectful interactions (no prolonged cat-and-mouse); follow with micro-training instead of blame.

- Clicking the link leads to an “oops - that could have been dangerous” learning moment.

3) Designed limits that keep risk low

- Environment, not apps: simulations run in browser-based look-alikes of Teams/Meet/Zoom; we can add light lag/glitch to sell the pretext, but we don’t escalate intensity.

- Clear end state: the chat-link click is the defined “fail,” which triggers instant coaching; users can exit safely before clicking.

- Reporting path: employees report from the original email (not from the browser); a report still counts as success if made before failing.

4) Cadence and content strategy (avoid training plateaus)

- Run as targeted one-offs (e.g., finance, EA, approvers), let the buzz settle, then re-test after regular training refreshers.

- Tie additional training to user behavior (fails, risky clicks) rather than pushing long-form training modules; keep ongoing training lightweight with ready-made training modules.

Use cases & rollout: where deepfakes fit in your phishing campaigns

Use deepfake simulations where real-world threats hurt most: executive/BEC approvals, vendor fraud, and IT support pretexts. Run a targeted one-off benchmark, space it, then assign additional training and re-test. Measure reporting rate, drop-off before action, and final fail rate to prove behavior change across your phishing campaigns and simulation programs.

High-impact use cases (pair with realistic scenarios)

- Executive impersonation / BEC approvals. A cloned “CEO/CFO” on a quick call pushes an urgent wire or bank-detail change - the classic business email compromise pattern. The payload can be a chat link to a credential harvester.

- Vendor fraud in finance. Pretexted “updated remit info” or “invoice about to bounce” delivered via email + call; train approvers and AP analysts.

- IT support scams. “Reset now / verify device” pushed in a fake Teams/Meet/Zoom room; payload is a “SharePoint/SSO” chat link.

- Multi-channel pretexting. Email ↔ call sequencing boosts credibility; include voice messages in your narrative to mirror AI-powered voice phishing.

Who to target first (and why)

- Finance, executive assistants, approvers → highest monetary risk from fraud attempts/BEC.

- IT / service desk → credential theft and policy bypass pressure.

- Broader org for awareness → short training modules while building baseline recognition.

Rollout pattern we recommend

- Targeted benchmark (one-off). Launch to a defined cohort.

- Space it. Let the buzz cool, then deliver micro-training or ready-made training modules to those who struggled.

- Re-test. New scenario, same process; show improvement over time in measurable behavior (↑ reports, ↑ safe drop-offs, ↓ fails).

- Close the loop. Use analytics to trigger additional training and keep ongoing training lightweight - avoid long-form defaults that cause training plateaus.
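
As a rough sketch of the re-test comparison above, the snippet below checks whether each metric moved in the desired direction between a benchmark and a re-test (↑ reports, ↑ safe drop-offs, ↓ fails). The metric names, the `DESIRED_DIRECTION` table, and the `kpi_changes` function are hypothetical, and the rates in the usage note are made-up illustrative values, not measured results.

```python
# Desired direction per metric: +1 means higher is better, -1 means lower is better.
DESIRED_DIRECTION = {
    "reporting_rate": +1,
    "drop_off_rate": +1,
    "fail_rate": -1,
}


def kpi_changes(benchmark: dict[str, float], retest: dict[str, float]) -> dict[str, dict]:
    """Return the change per metric and whether it moved in the desired direction."""
    changes = {}
    for name, direction in DESIRED_DIRECTION.items():
        delta = retest[name] - benchmark[name]
        changes[name] = {"delta": delta, "improved": delta * direction > 0}
    return changes


# Illustrative values only.
benchmark = {"reporting_rate": 0.20, "drop_off_rate": 0.30, "fail_rate": 0.40}
retest = {"reporting_rate": 0.35, "drop_off_rate": 0.40, "fail_rate": 0.15}
result = kpi_changes(benchmark, retest)
```

With these illustrative inputs, all three metrics are flagged as improved. A metric that stays flat or moves the wrong way is flagged `False`, which could be a cue to assign additional training to that cohort.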

Getting started: deployment, consent & customization

Set up a deepfake phishing simulation program in weeks, not months: pick a high-risk cohort and scenario, secure consent, capture a short voice sample, generate the avatar, and launch a realistic simulation (email ➝ fake video meeting).

Step-by-step rollout

Choose the scenario & cohort

Start where business email compromise or approvals hurt most (finance/AP, EAs, approvers; IT for credential resets). Keep the narrative anchored to real-world threats.

Consent & contract

You confirm that the owner of the likeness/voice has consented. Provider agreements specify no storage of, or model training on, your content.

Capture assets

Record 1–2 minutes of the subject’s voice (or use approved samples) and supply a still/video + script. Two avatar options: fully consented capture (most realistic) or photo + short voice (lower effort).

Production window

Standard turnaround is ~5 business days after the voice sample is received; avatar generation takes ~1 day.

Launch the drill (realistic but safe)

Send a simulated phishing email that routes to a browser-based Teams/Meet/Zoom look-alike. The cloned-voice avatar applies urgency and drops a “SharePoint/SSO” chat link (the safe fail).

Define success before go-live

- Reporting rate (from inbox): a report made via the original email counts as a success.

- Drop-off before action: users who exit the fake call without clicking.

- Final fail rate: chat-link clicks (endpoint).
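
A minimal sketch of how these three measures could be computed from per-user outcomes follows. The outcome labels and the `campaign_kpis` function are hypothetical, and using all targeted users as the denominator is an assumption; you might instead measure drop-off only among users who opened the fake call.

```python
from collections import Counter


def campaign_kpis(outcomes: list[str]) -> dict[str, float]:
    """Return the three rates as fractions of all targeted users.

    `outcomes` holds one label per targeted user: "reported",
    "dropped_off", "failed", or "no_action" (hypothetical labels).
    """
    total = len(outcomes)
    if total == 0:
        # No targeted users: report zeros rather than dividing by zero.
        return {"reporting_rate": 0.0, "drop_off_rate": 0.0, "fail_rate": 0.0}
    counts = Counter(outcomes)  # missing labels count as 0
    return {
        "reporting_rate": counts["reported"] / total,
        "drop_off_rate": counts["dropped_off"] / total,
        "fail_rate": counts["failed"] / total,
    }


# Illustrative cohort of 10 users.
sample = ["reported"] * 5 + ["dropped_off"] * 3 + ["failed"] * 2
kpis = campaign_kpis(sample)
```

Agreeing on the denominator before go-live keeps the benchmark and later re-tests comparable.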

Close the loop with training

Trigger micro-training/training modules for risky user behavior.

Re-test for proof

Space out simulations, swap in a fresh scenario, and re-run to show a reduction in phishing clicks and behavior change across phishing campaigns.

Deepfake phishing simulation vendor comparison table
